
Submitted by , posted on 21 November 2002


Image Description, by

These screenshots were taken from my undergrad project. The aim of the project was to develop a high-performance progressive mesh and explore its applications.

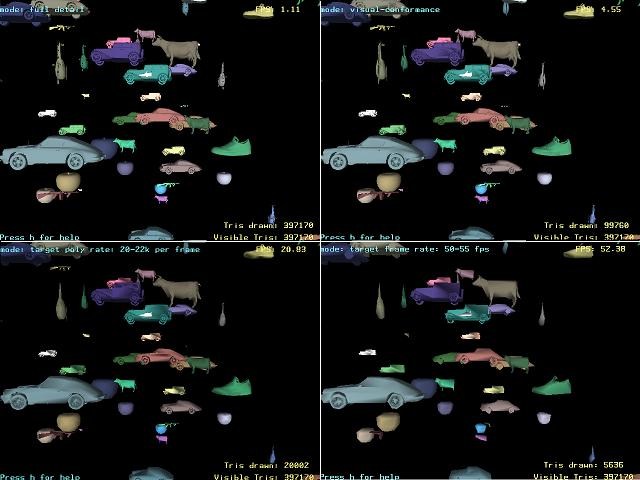

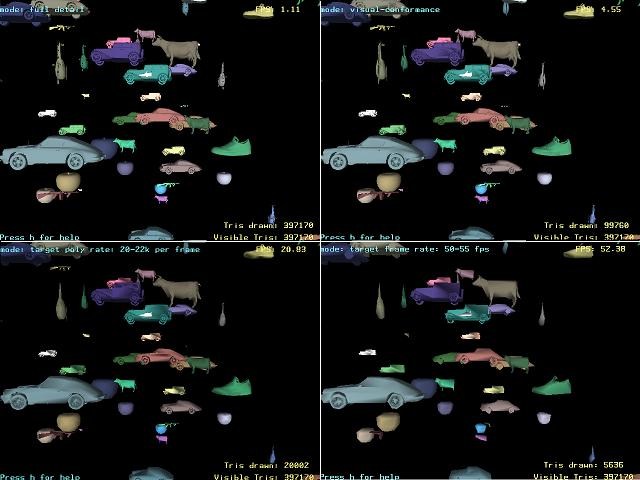

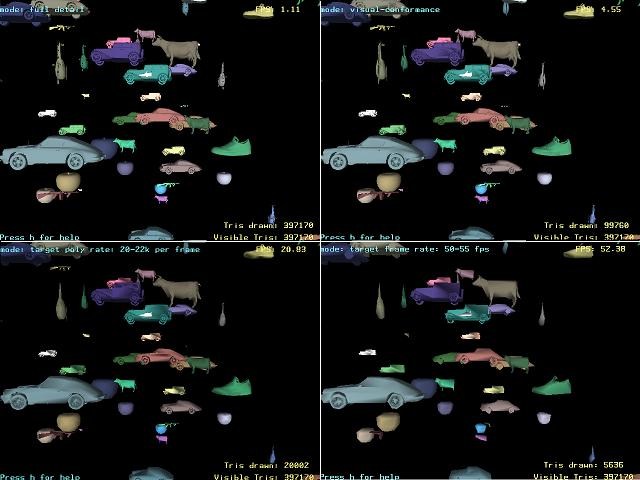

The image shows four screenshots. Each shot is of the same scene, rendered with a different mechanism. The frame rate is displayed in the top right of each shot. At the bottom right, the number of visible triangles (after frustum clipping) is shown alongside the number of triangles actually drawn by the rendering mechanism (labeled "Tris drawn").
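The post doesn't say how the visible-triangle count is computed. As a minimal sketch, assuming a conservative per-object bounding-sphere test against the six frustum planes (the names `SceneObject`, `sphere_in_frustum` and `visible_triangles` are hypothetical), a count like the one in the overlay could be produced like this:

```python
from dataclasses import dataclass

# Hypothetical plane format (a, b, c, d): a point is inside when a*x + b*y + c*z + d >= 0.
# The normal (a, b, c) is assumed to be unit length so the value is a true distance.
Plane = tuple[float, float, float, float]


@dataclass
class SceneObject:
    center: tuple[float, float, float]
    radius: float      # bounding-sphere radius
    triangles: int     # triangles in the object's current LOD


def sphere_in_frustum(center, radius, planes) -> bool:
    """Conservative test: False only if the sphere is fully outside some plane."""
    x, y, z = center
    for a, b, c, d in planes:
        if a * x + b * y + c * z + d < -radius:
            return False
    return True


def visible_triangles(objects, planes) -> int:
    """Sum the triangles of every object whose bounding sphere touches the frustum."""
    return sum(o.triangles for o in objects
               if sphere_in_frustum(o.center, o.radius, planes))
```

Because the test works per object, a partially visible object counts in full, so this over-estimates slightly compared with per-triangle clipping.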

The screenshots were taken on a horribly old machine (a Pentium 200), and show that rendering the scene at full detail results in a frame rate of 1.11 fps (top left).

Top right: a visually conforming rendition, where only as much detail is removed as remains imperceptible (4.55 fps).

Bottom left: 20,002 polygons distributed across the scene (20.83 fps).

Bottom right: a system that reduces detail until the frame rate reaches 50-55 fps (52.38 fps).
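The bottom-right mode reduces detail until the frame rate lands in the 50-55 fps range. The post doesn't describe the controller, so this is a minimal sketch assuming a per-frame triangle budget that is nudged up or down by 10% with a hysteresis band (the function name, step size and clamp limits are all hypothetical):

```python
def adjust_budget(budget: int, fps: float, low: float = 50.0, high: float = 55.0,
                  min_budget: int = 100, max_budget: int = 1_000_000) -> int:
    """Nudge a triangle budget so the measured frame rate drifts into [low, high]."""
    if fps < low:
        budget = budget * 90 // 100    # too slow: drop 10% of the triangles
    elif fps > high:
        budget = budget * 110 // 100   # spare time: allow 10% more triangles
    # Inside the band the budget is left alone, so the detail level doesn't oscillate.
    return max(min_budget, min(max_budget, budget))
```

Integer arithmetic keeps the budget exact; the band between `low` and `high` is what stops the detail from flickering frame to frame.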

The program shown uses OpenGL and GLUT, and runs under both Windows and Linux (well, most *nix).

The data structure I made never modifies the vertex list; it uses glDrawElements to fire the objects at the card. Multiple instances of the same object share one reference mesh.

The mesh is always at some given LOD (level of detail) and carries additional information that lets it transition incrementally to any desired LOD.
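The post doesn't give the record layout, so here is one possible way to combine the properties described in the two paragraphs above: a shared, never-modified vertex list, per-instance index arrays, and incremental steps between LODs. This is a minimal sketch assuming edge-collapse records, with triangles pre-ordered so that the ones each collapse removes sit at the tail of the active range (`Collapse`, `ReferenceMesh` and `MeshInstance` are hypothetical names):

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Collapse:
    """One edge collapse: vertex `src` merges into `dst` (hypothetical layout)."""
    src: int
    dst: int
    removed_tris: int        # tail triangles that become degenerate
    fixups: tuple[int, ...]  # index-array positions in surviving triangles holding `src`


@dataclass(frozen=True)
class ReferenceMesh:
    """Shared by every instance of an object; never modified after loading."""
    vertices: tuple[tuple[float, float, float], ...]
    indices: tuple[int, ...]         # full-detail triangle list, collapse-ordered
    collapses: tuple[Collapse, ...]  # applied in order when coarsening


class MeshInstance:
    """Per-instance LOD state: a private index array plus a counter."""

    def __init__(self, ref: ReferenceMesh):
        self.ref = ref                     # vertex list stays shared, untouched
        self.indices = list(ref.indices)   # only the indices are copied
        self.level = 0                     # number of collapses applied (0 = full detail)
        self.active_tris = len(ref.indices) // 3

    def _collapse(self) -> None:
        c = self.ref.collapses[self.level]
        for pos in c.fixups:
            self.indices[pos] = c.dst
        self.active_tris -= c.removed_tris
        self.level += 1

    def _split(self) -> None:
        self.level -= 1
        c = self.ref.collapses[self.level]
        for pos in c.fixups:
            self.indices[pos] = c.src
        self.active_tris += c.removed_tris

    def set_level(self, target: int) -> None:
        """Step one collapse or split at a time until `target` is reached."""
        target = max(0, min(target, len(self.ref.collapses)))
        while self.level < target:
            self._collapse()
        while self.level > target:
            self._split()

    def draw_count(self) -> int:
        """Number of indices to draw at the current LOD."""
        return 3 * self.active_tris
```

In an OpenGL program, the first `draw_count()` entries of `indices` would be what gets passed to `glDrawElements(GL_TRIANGLES, count, GL_UNSIGNED_INT, ...)`. Splits undo collapses in reverse order, so repeated rewrites of the same index position unwind correctly.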

Each LOD level has an associated error term, which lets us distribute polygons across the scene intelligently, giving preference to the details that matter most.
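One simple way to use per-step error terms to spread a polygon budget (like the 20,002 polygons in the bottom-left shot) is a greedy priority queue: always grant the refinement step, across all objects, that removes the most error, until the budget runs out. This is a sketch under stated assumptions, not necessarily the author's scheme: each object is assumed to list its steps as `(tris_added, error_reduction)` in most-significant-first order, errors are taken as already view-adjusted, and `distribute_budget` is a hypothetical name.

```python
import heapq


def distribute_budget(objects, budget):
    """Greedily hand out refinement steps across objects under a triangle budget.

    objects: name -> (base_tris, steps), where steps is a list of
             (tris_added, error_reduction) in most-significant-first order.
    Returns (steps_granted_per_object, triangles_used).
    """
    granted = {name: 0 for name in objects}
    used = sum(base for base, _ in objects.values())
    heap = []  # max-heap on error reduction, via negated keys
    for name, (_, steps) in objects.items():
        if steps:
            heapq.heappush(heap, (-steps[0][1], name))
    while heap:
        _, name = heapq.heappop(heap)
        steps = objects[name][1]
        k = granted[name]
        tris_added = steps[k][0]
        if used + tris_added > budget:
            continue  # this object's next step doesn't fit; other objects still might
        used += tris_added
        granted[name] = k + 1
        if k + 1 < len(steps):
            # Steps must be taken in order, so only the next one becomes a candidate.
            heapq.heappush(heap, (-steps[k + 1][1], name))
    return granted, used
```

The greedy choice is only a heuristic: it is optimal when each object's error reductions shrink step by step, which is roughly what a most-significant-first ordering aims for.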

As with standard PMs (progressive meshes), this incremental data is stored on disk in order from most to least significant.

It was basically an exercise in developing a *FAST* PM and exploring ways to make use of it. The best feature of this whole exercise, in my opinion, is the idea of scalable games:

Because of the ordering of the data, we only need to read as much of it as we are interested in. This means we can have a 150 MB model on disk but read only 150 KB of it. Reading that 150 KB gives us a lower-detail approximation of the full-blown model, which we then use in the game because the game does not need much detail. However, in the future, when players get their GeForce 18, the game can read all of the data...
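Since the records are stored most significant first, "read only 150 KB of a 150 MB model" amounts to truncating the read. A minimal sketch, assuming a hypothetical fixed-size vertex-split record of a parent vertex index plus the new vertex position (a real progressive-mesh record carries more, such as neighbour and attribute data), using Python's `struct` module:

```python
import io
import struct

# Hypothetical fixed-size vertex-split record: parent vertex index + new position.
SPLIT_RECORD = struct.Struct("<I3f")  # little-endian, 16 bytes, no padding


def write_splits(stream, splits):
    """Write (parent, (x, y, z)) records, most significant first."""
    for parent, (x, y, z) in splits:
        stream.write(SPLIT_RECORD.pack(parent, x, y, z))


def read_prefix(stream, byte_budget):
    """Read only the leading records that fit in `byte_budget` bytes."""
    count = byte_budget // SPLIT_RECORD.size
    data = stream.read(count * SPLIT_RECORD.size)
    usable = len(data) - len(data) % SPLIT_RECORD.size  # ignore a truncated tail
    return [(rec[0], rec[1:]) for rec in SPLIT_RECORD.iter_unpack(data[:usable])]
```

Whatever prefix is read is already a valid coarse-to-fine sequence, so a later, faster machine can simply keep reading from where the stream stopped.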

There are a lot of advanced topics I didn't get to touch, because this is a "single subject" project... however, I will be returning to do postgraduate work in this area, so hopefully I'll have more things to send you guys.

Things are not clear on copyright, so I won't post code/programs, but if anyone is interested, let me know...

-Alex Streit
