
Submitted by Stein Nygaard, posted on 21 November 2004


Image Description, by Stein Nygaard

Hey flipcoders :)

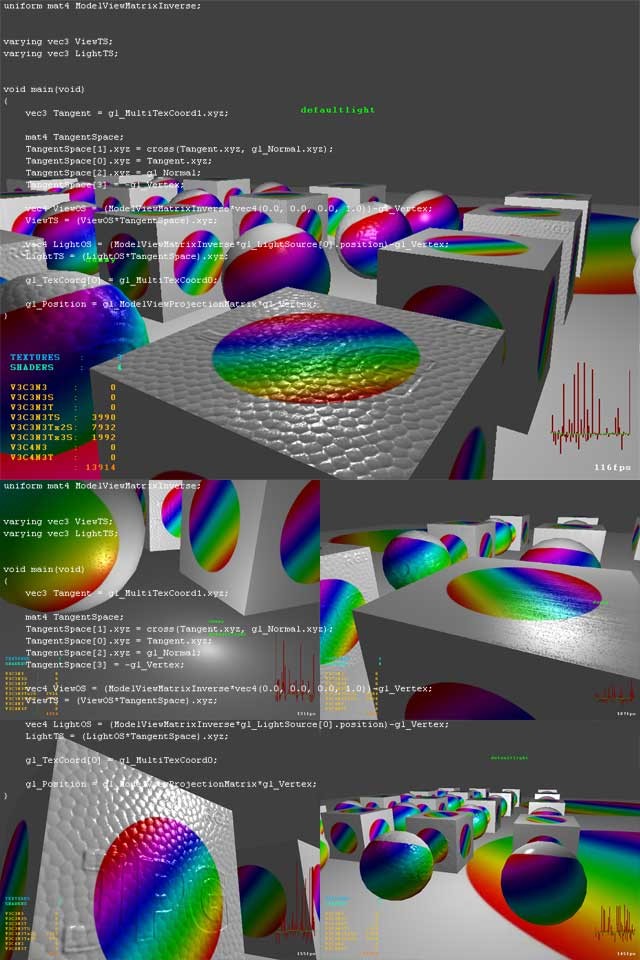

First off... Nice shader, eh? Hehe :)

Been working with my glsl-shaders, and lately I've been focusing on

getting my normal-mapping working. Finally I got it working, on ATI _and_

nVidia cards!

The thing is, the glsl-implementations are still a bit weird sometimes.

Let me give an example:

I was working a bit on my homecode at work (sorry boss), where I have a

machine with an nVidia card. I got some stuff going, using the built-in

matrix-variable gl_ModelViewMatrixInverse... But, when I got home and

tried it out, it didn't even compile. Why? Because ATI haven't implemented

this built-in matrix (yet?).

So, I sat down and implemented stuff at home, using a uniform matrix

instead, computing the inverse modelview on cpu. Worked just fine :)
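
For anyone wanting to try the same workaround, here is a minimal sketch in C of the cpu side. It is not my actual code, just an illustration: the function name is made up, and it assumes the modelview is affine (built from rotations, scales and translations, so the bottom row is 0 0 0 1) and stored column-major the way OpenGL hands it out.

```c
#include <math.h>

/* Hypothetical helper: invert an affine 4x4 matrix stored column-major
   (OpenGL layout). Assumes the bottom row is (0, 0, 0, 1).
   Returns 1 on success, 0 if the upper-left 3x3 block is singular. */
int invert_affine_modelview(const float m[16], float out[16])
{
    /* Upper-left 3x3 block: element (row r, col c) lives at m[c*4 + r]. */
    float a00 = m[0], a01 = m[4], a02 = m[8];
    float a10 = m[1], a11 = m[5], a12 = m[9];
    float a20 = m[2], a21 = m[6], a22 = m[10];
    float tx = m[12], ty = m[13], tz = m[14];

    /* Cofactors of the first row give the determinant. */
    float c00 = a11 * a22 - a12 * a21;
    float c01 = a12 * a20 - a10 * a22;
    float c02 = a10 * a21 - a11 * a20;
    float det = a00 * c00 + a01 * c01 + a02 * c02;
    float inv, i00, i01, i02, i10, i11, i12, i20, i21, i22;

    if (fabsf(det) < 1e-12f)
        return 0;
    inv = 1.0f / det;

    /* Inverse of the 3x3 block = adjugate / determinant. */
    i00 = c00 * inv;
    i01 = (a02 * a21 - a01 * a22) * inv;
    i02 = (a01 * a12 - a02 * a11) * inv;
    i10 = c01 * inv;
    i11 = (a00 * a22 - a02 * a20) * inv;
    i12 = (a02 * a10 - a00 * a12) * inv;
    i20 = c02 * inv;
    i21 = (a01 * a20 - a00 * a21) * inv;
    i22 = (a00 * a11 - a01 * a10) * inv;

    /* Write the result back in column-major order. */
    out[0] = i00; out[1] = i10; out[2]  = i20; out[3]  = 0.0f;
    out[4] = i01; out[5] = i11; out[6]  = i21; out[7]  = 0.0f;
    out[8] = i02; out[9] = i12; out[10] = i22; out[11] = 0.0f;

    /* Inverse translation is -(inverse 3x3) * t. */
    out[12] = -(i00 * tx + i01 * ty + i02 * tz);
    out[13] = -(i10 * tx + i11 * ty + i12 * tz);
    out[14] = -(i20 * tx + i21 * ty + i22 * tz);
    out[15] = 1.0f;
    return 1;
}
```

The result can then be handed to the shader as a mat4 uniform, e.g. with glUniformMatrix4fvARB (or glUniformMatrix4fv in OpenGL 2.0) with the transpose flag set to GL_FALSE, since the array is already column-major. If your modelview is not affine, you'd need a general 4x4 inverse instead.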

Then got to work again, and tried it out with the new code. Wtf... Didn't

work at all! It compiled and ran, but the uniform matrix somehow wasn't

copied to the shader.

What to do... I got the very latest official drivers from nVidia, and swiz:

Everything looked great :)

So... It's quite important to have new drivers, when working with glsl, as

they're updated constantly.

I've also been running 3dlabs' parsertest on my 9800PRO and on my fx5900

ultra at work - both with the latest drivers.

Thought that it might be of interest to other glsl-developers... It came as

a big surprise to me that the nVidia drivers don't pass anywhere

near as many of the tests as the ATI drivers do.

I'm not really sure if this parsertest is reliable, but if it is: I'm

happy about my 9800 from ATI.

Have a look here:

http://steinware.dk/glsl_logs/9800pro.html

http://steinware.dk/glsl_logs/fx5900ultra.html

Please comment with any experiences you may have had using glsl :)

- Stein Nygaard | http://steinware.dk
