
Submitted by , posted on 19 October 2001

Image Description, by

I guess this is just as much a public announcement as it is an IOTD. So if you're not interested in any announcements I might be inclined to make, then feel free to ignore the text and enjoy the pic.

I've been working on some radiosity code lately, and I've paid a lot of attention to accuracy and correctness. In the process, I found myself comparing my results to the original Cornell box results.

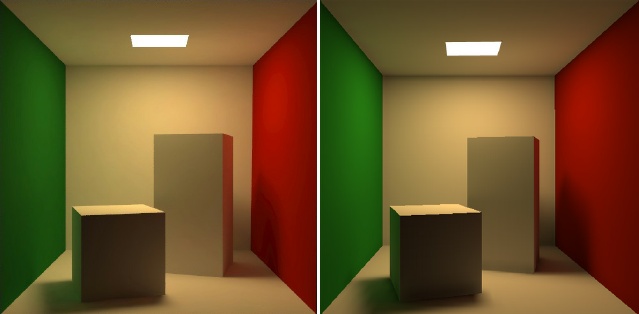

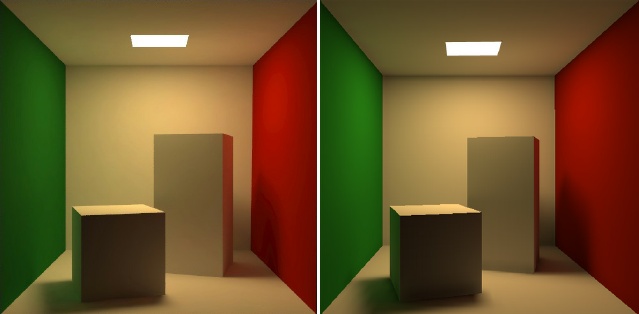

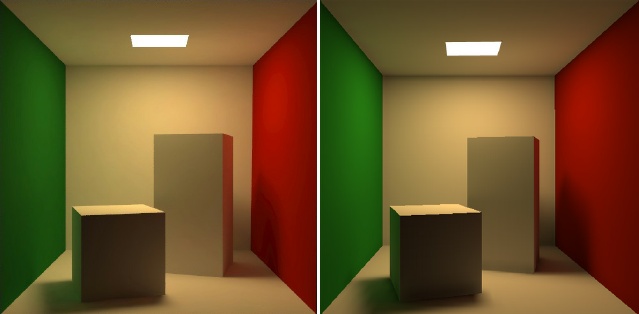

On the left, you'll see the original Cornell box image (as generated at Cornell University), and on the right, you'll see the one generated by the new radiosity processor I've been working on.

If you look closely, you'll see that the images are not identical. This is for a few reasons. First, the original Cornell box was not processed with RGB light, but with a series of measured wavelengths, so I guessed at the RGB values and surface reflectivities when I generated my image. I also didn't bother matching their camera position and FOV; I just "moused" it. :) The image on the right was post-processed with a gamma of 2.2, and no other post-processing was used. Finally, the image on the right is rendered using lightmaps.
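For reference, applying a gamma of 2.2 to linear radiosity output usually means raising each channel to the power 1/2.2 before quantizing to 8 bits. The Python sketch below assumes that convention and clamps values to [0, 1] first; the post doesn't say how out-of-range values were handled, and `gamma_correct` and `to_8bit` are hypothetical names.

```python
def gamma_correct(linear, gamma=2.2):
    """Map a linear value to display space with a simple power-law gamma."""
    # Assumption: out-of-range radiosity values are clamped to [0, 1] first.
    clamped = min(max(linear, 0.0), 1.0)
    return clamped ** (1.0 / gamma)


def to_8bit(linear, gamma=2.2):
    """Gamma-correct one channel and quantize it to a 0..255 byte."""
    return int(round(gamma_correct(linear, gamma) * 255.0))
```

Applied per RGB channel, a linear value of 0.5 maps to about 0.73, or byte 186, which is why gamma-corrected radiosity images look noticeably brighter in the mid-tones.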

I don't know how long it takes to generate the original Cornell box, but it takes me about 12 seconds on my 1 GHz P3. It required over 1200 iterations to reach a convergence of 99.999% (theoretically, you'd need to run an infinite number of iterations to reach a full 100% convergence).
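Progressive refinement radiosity repeatedly "shoots" one patch's unshot energy to every other patch, which is why convergence only approaches 100%. Here's a minimal Python sketch of that loop. It assumes convergence is measured as the fraction of emitted energy that is no longer waiting to be shot (the post doesn't define its metric), that the patch with the most unshot energy is shot first, and that form factors are supplied as a precomputed matrix. The function name and the toy scene are hypothetical.

```python
def progressive_refinement(emission, reflectance, area, form_factors,
                           target=0.99999, max_iters=100000):
    """Shooting (progressive refinement) radiosity on a precomputed scene.

    form_factors[i][j] is the fraction of energy leaving patch i that
    reaches patch j. Returns (radiosity, iterations, convergence).
    """
    n = len(emission)
    radiosity = list(emission)   # B_i starts at self-emission
    unshot = list(emission)      # radiosity not yet distributed to other patches
    emitted = sum(e * a for e, a in zip(emission, area))
    if emitted == 0.0:
        return radiosity, 0, 1.0  # nothing to shoot in an unlit scene
    iterations = 0
    while True:
        remaining = sum(u * a for u, a in zip(unshot, area))
        # Assumed metric: fraction of emitted energy that has already been shot.
        convergence = 1.0 - remaining / emitted
        if convergence >= target or iterations >= max_iters:
            break
        # Shoot from the patch holding the most unshot energy (dB_i * A_i).
        i = max(range(n), key=lambda k: unshot[k] * area[k])
        shot, unshot[i] = unshot[i], 0.0
        for j in range(n):
            if j == i:
                continue
            # Reciprocity: F_ji = F_ij * A_i / A_j, so B_j gains rho_j * dB_i * F_ji.
            delta = reflectance[j] * shot * form_factors[i][j] * area[i] / area[j]
            radiosity[j] += delta
            unshot[j] += delta
        iterations += 1
    return radiosity, iterations, convergence
```

Because every reflectance is below 1 and a patch's form factors sum to at most 1, each shot can only shrink the total unshot energy, so the metric rises monotonically but never reaches exactly 100%. For example, with three unit-area patches that each see the other two with a form factor of 0.3, where patch 0 emits 1.0 and is black and patches 1 and 2 have reflectance 0.5, the loop settles at B1 = B2 = 0.15/0.85 ≈ 0.176, the solution of B_i = E_i + ρ_i Σ_j F_ij B_j.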

If you're impatient, a very reasonable result (with negligible difference from the one above) can be had in just a couple of seconds.

About the tool:

It's also a lightmap generator/packer: it will generate tightly packed lightmaps for an entire dataset, along with proper UV values for those lightmaps. In other words, if you want lighting for your game, you just hand your geometry to this tool, and it spits out your lightmaps and UV values.
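To illustrate what "lightmaps plus UV values" means in practice, here's a simple shelf packer in Python. It is not the tool's packing algorithm (the post doesn't describe it); it just places per-polygon lightmap rectangles into one atlas, tallest first, and converts each placement to normalized UV corners. The function name and atlas dimensions are assumptions.

```python
def shelf_pack(sizes, atlas_w, atlas_h):
    """Pack (width, height) lightmap rectangles into one atlas with a shelf packer.

    Returns a list of (x, y, (u0, v0, u1, v1)) in input order, or None if
    the rectangles do not fit.
    """
    # Place the tallest rectangles first so shelves waste less height.
    order = sorted(range(len(sizes)), key=lambda k: sizes[k][1], reverse=True)
    placements = [None] * len(sizes)
    shelf_y = 0   # top edge of the current shelf
    shelf_h = 0   # height of the current shelf (its tallest rectangle)
    cursor_x = 0  # next free x position on the current shelf
    for k in order:
        w, h = sizes[k]
        if w > atlas_w:
            return None
        if cursor_x + w > atlas_w:
            # Current shelf is full: open a new one below it.
            shelf_y += shelf_h
            shelf_h = 0
            cursor_x = 0
        if shelf_y + h > atlas_h:
            return None
        x, y = cursor_x, shelf_y
        cursor_x += w
        shelf_h = max(shelf_h, h)
        # Normalized texture coordinates of the rectangle's corners.
        uv = (x / atlas_w, y / atlas_h, (x + w) / atlas_w, (y + h) / atlas_h)
        placements[k] = (x, y, uv)
    return placements
```

A production packer would also leave a texel or two of padding between rectangles so bilinear filtering doesn't bleed neighboring lightmaps into each other.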

A lot of new concepts went into the development of this tool:

- No use of hemicubes or hemispheres; it uses a completely analytical solution with a perfect visibility database generated by a fairly well optimized beam tree. There isn't a single ray traced in the generation of the image above.

- Geometry is stored in a clipping octree, and at each octree node, a clipping BSP tree is built. The BSP uses a new construction technique that has proven to vastly improve the speed of building the BSP, as well as greatly reducing tree depth and the number of splits. From this, we perform the radiosity process on the precise visible fragments of polygons.

- A new adaptive patch technique that is anchored to the speed of the processing itself. As with all progressive refinement radiosity processors, the further along you get, the slower it gets. This adaptive patch system is keyed to the progress and keeps the processing running at a nearly linear rate. This trades accuracy for speed, but only when the amount of energy being reflected is negligible. This is also completely configurable.

- Other accuracy options, including using the actual Nusselt analogy for form factor calculation (about 5% slower, but much more accurate).

- Also for accuracy, every single lightmap texel is an element, and the tool gets this almost for free. :)

I'm considering doing a 12-week course on radiosity at GameInstitute.com sometime in the future (if they'll have me :). The course will most likely include this technique as well as other common techniques. It will probably also cover lightmap generation and the other techniques (new and old) employed in this tool.

At some point, I also hope to release full source to this tool to the public.
