
Submitted by , posted on 24 June 2002


Image Description, by

The image above shows some pictures of my high-school robotics project.

Working as a team of three, we were given complete freedom in designing and building our final project.

Although most of the students in our class decided to stick to the popular concept of a robot, we chose to design a rather unconventional one: instead of a single robot unit consisting of a sensor, a processing unit and a vehicle, we decided to split our system into several independent modules and components.

The first component is the processing unit. We used a standard personal computer for this; our only alternative was a simple processing unit programmed in a semi-assembly language. Using that unit would have meant no debugging facilities, no high-level programming language and, most importantly, a tight limit on RAM. Using a personal computer was therefore clearly the wise choice: we used C++ as the programming language and MSVC as our IDE and debugging environment.

We had two hardware modules in our project. The first is a toy car (Figure 2) with a remote control (Figure 1), which served as our vehicle component. Since our main goal was developing the algorithm itself, we decided to avoid mechanical work as much as possible. Accordingly, we didn't build the car ourselves but bought a toy car. We disassembled the electronic part of the remote control and connected it through electrical relays to a parallel-port cable. We then used the WinIO driver to access the parallel port and control the car's actions. After a lot of work on this component, we had a fully extensible and usable library that controlled the car's movement and rotation.
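In software, driving the car through relays comes down to turning a desired movement into a pattern of bits on the parallel port's data lines. The sketch below shows one way such a library could be structured. It is not the team's code. The bit assignments (`kForwardBit` and so on), the `Drive`/`Steer` enums and the `CarController` class are hypothetical. The actual port write is left to an injected callback. On the original rig, that callback would wrap the WinIO driver's port-output call to the printer port's data register (commonly 0x378 for LPT1).

```cpp
#include <cstdint>
#include <functional>
#include <utility>

// Hypothetical commands; the real remote may expose different buttons.
enum class Drive { Stop, Forward, Backward };
enum class Steer { Straight, Left, Right };

// Hypothetical wiring: each parallel-port data pin (D0-D3) energises one relay,
// which "presses" one button on the remote control.
constexpr std::uint8_t kForwardBit  = 1u << 0;
constexpr std::uint8_t kBackwardBit = 1u << 1;
constexpr std::uint8_t kLeftBit     = 1u << 2;
constexpr std::uint8_t kRightBit    = 1u << 3;

// Builds the byte to place on the data register for one movement command.
constexpr std::uint8_t commandByte(Drive d, Steer s) {
    std::uint8_t bits = 0;
    if (d == Drive::Forward)  bits |= kForwardBit;
    if (d == Drive::Backward) bits |= kBackwardBit;
    if (s == Steer::Left)     bits |= kLeftBit;
    if (s == Steer::Right)    bits |= kRightBit;
    return bits;
}

// Thin controller. The port write is injected so the logic can be tested
// without hardware; on a real rig it would call into the port driver.
class CarController {
public:
    explicit CarController(std::function<void(std::uint8_t)> writePort)
        : writePort_(std::move(writePort)) {}

    void move(Drive d, Steer s) { writePort_(commandByte(d, s)); }
    void stop() { writePort_(0); }  // release all relays

private:
    std::function<void(std::uint8_t)> writePort_;
};
```

Keeping the bit mapping in one `constexpr` function means a rewired remote only requires changing the four constants. Injecting the writer lets the same class drive real hardware or a test stub.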

The second hardware module is our sensor component, which is actually a cheap webcam (Intel EasyPC Camera, Figure 4). Again, we had to write some code to access the camera's streaming picture. We tried libraries such as Microsoft WIA and TWAIN, but ended up using Intel's free OpenCV library.

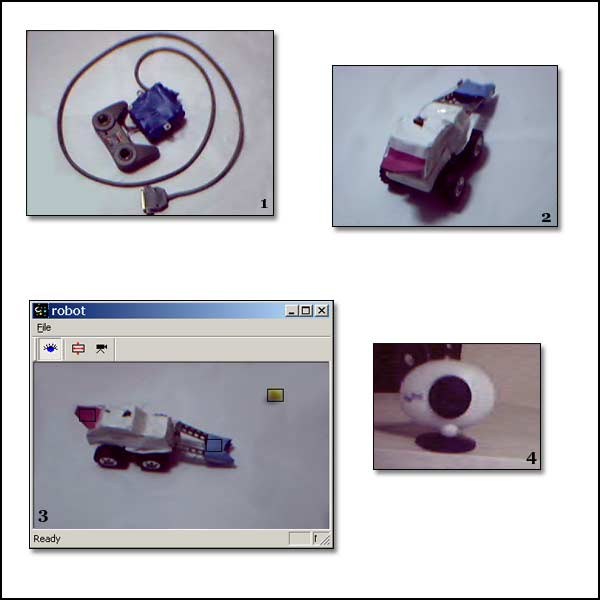

We now had input data (the pictures taken by the camera) and output methods (the car controller). The processing itself consisted of two algorithms. The first is an object recognition algorithm, which detected objects in the input picture and returned their coordinates. Note that these were 2D image coordinates, distorted by the camera's perspective, so we transformed them into top-view coordinates. The second is an object navigation algorithm, which decided how to move the car according to the object coordinates calculated by the first algorithm.
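The write-up doesn't say how objects were recognised. One simple technique that fits the description (find an object and return its image coordinates) is colour thresholding followed by a centroid computation. The C++17 sketch below assumes the ball has a distinctive colour. `Image`, `isBallColour` and its thresholds, and the `minPixels` noise cutoff are all hypothetical. A frame grabbed from the camera would be copied into such a buffer, or the same loop could run directly over the capture library's pixel data. The car could be located the same way, using a second colour rule.

```cpp
#include <cstdint>
#include <optional>
#include <vector>

struct Rgb { std::uint8_t r, g, b; };

// Minimal row-major RGB frame (hypothetical stand-in for a camera frame).
struct Image {
    int width = 0, height = 0;
    std::vector<Rgb> pixels;  // width * height entries
    const Rgb& at(int x, int y) const { return pixels[y * width + x]; }
};

struct Blob { double x, y; int pixelCount; };

// Hypothetical colour rule: strongly red pixels are assumed to belong to the ball.
bool isBallColour(const Rgb& p) {
    return p.r > 150 && p.g < 100 && p.b < 100;
}

// Returns the centroid (in image pixel coordinates) of all pixels accepted by
// `match`, or nothing if too few pixels match to count as a real object.
template <typename Pred>
std::optional<Blob> findBlob(const Image& img, Pred match, int minPixels = 20) {
    double sumX = 0.0, sumY = 0.0;
    int count = 0;
    for (int y = 0; y < img.height; ++y)
        for (int x = 0; x < img.width; ++x)
            if (match(img.at(x, y))) {
                sumX += x;
                sumY += y;
                ++count;
            }
    if (count < minPixels) return std::nullopt;
    return Blob{sumX / count, sumY / count, count};
}
```

The centroid averages all matching pixels. Isolated noise pixels therefore only shift the result slightly, and the `minPixels` check rejects frames where the object is not visible at all.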
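Because the car and the ball sit on a flat surface, the image-to-top-view transformation can be modelled as a planar homography. This is a single 3×3 matrix that maps image pixels to floor coordinates. The post doesn't describe the team's actual transformation, so the sketch below is one standard approach, not theirs. It fits the matrix from four calibration points whose floor positions are known (for example, the corners of the playing area), then applies it to detected objects. `fitHomography`, `toTopView` and all point values are illustrative. Each correspondence $(u, v) \mapsto (x, y)$ contributes two linear equations, derived from $x = (h_0 u + h_1 v + h_2)/(h_6 u + h_7 v + 1)$ and the analogous expression for $y$.

```cpp
#include <array>
#include <cmath>
#include <stdexcept>
#include <utility>

struct Pt { double x, y; };
using Homography = std::array<double, 9>;  // row-major 3x3, last entry fixed to 1

// Solves an 8x8 linear system given as an augmented 8x9 matrix
// (Gauss-Jordan elimination with partial pivoting).
std::array<double, 8> solve8(std::array<std::array<double, 9>, 8> m) {
    for (int col = 0; col < 8; ++col) {
        int pivot = col;
        for (int r = col + 1; r < 8; ++r)
            if (std::fabs(m[r][col]) > std::fabs(m[pivot][col])) pivot = r;
        if (std::fabs(m[pivot][col]) < 1e-12)
            throw std::runtime_error("degenerate calibration points");
        std::swap(m[col], m[pivot]);
        for (int r = 0; r < 8; ++r) {
            if (r == col) continue;
            const double f = m[r][col] / m[col][col];
            for (int c = col; c < 9; ++c) m[r][c] -= f * m[col][c];
        }
    }
    std::array<double, 8> h{};
    for (int i = 0; i < 8; ++i) h[i] = m[i][8] / m[i][i];
    return h;
}

// Fits H so that each image point img[i] maps to ground[i].
Homography fitHomography(const std::array<Pt, 4>& img, const std::array<Pt, 4>& ground) {
    std::array<std::array<double, 9>, 8> m{};
    for (int i = 0; i < 4; ++i) {
        const double u = img[i].x, v = img[i].y, x = ground[i].x, y = ground[i].y;
        m[2 * i]     = {u, v, 1.0, 0.0, 0.0, 0.0, -u * x, -v * x, x};
        m[2 * i + 1] = {0.0, 0.0, 0.0, u, v, 1.0, -u * y, -v * y, y};
    }
    const std::array<double, 8> h = solve8(m);
    return {h[0], h[1], h[2], h[3], h[4], h[5], h[6], h[7], 1.0};
}

// Maps an image point (e.g. a blob centroid) to top-view floor coordinates.
Pt toTopView(const Homography& H, Pt p) {
    const double w = H[6] * p.x + H[7] * p.y + H[8];
    return {(H[0] * p.x + H[1] * p.y + H[2]) / w,
            (H[3] * p.x + H[4] * p.y + H[5]) / w};
}
```

Calibration only needs to be redone when the camera moves. After that, each frame's object coordinates cost a handful of multiplications to convert. OpenCV also provides ready-made functions for this step.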

The final result:

A ball and the car are initially placed at random locations on a flat surface. The camera is then positioned so that both the car and the ball are within its field of view. Our program, a Win32 application (Figure 3), is started and the robot begins its run. The car automatically finds the ball, approaches it, rotates with it until it points at one of the corners, and pushes the ball into that corner.
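The behaviour described above (find the ball, approach it, turn with it towards a corner, push) maps naturally onto a two-phase state machine driven by top-view coordinates. The sketch below is one possible reading, not the team's navigation algorithm. It assumes the car's heading is known; for example, it could be estimated from two coloured markers on the car, which the post does not mention. Headings are measured counter-clockwise with y pointing up. `Navigator`, `Command` and the thresholds `contactDist`, `headingTol` and `goalDist` are hypothetical. The returned `Command` would be handed to the car-control layer.

```cpp
#include <cmath>

struct Vec2 { double x, y; };
enum class Command { Forward, TurnLeft, TurnRight, Stop };
enum class Phase { ApproachBall, PushToCorner };

// Wraps an angle in radians to the range (-pi, pi].
double wrapAngle(double a) {
    const double pi = std::acos(-1.0);
    while (a > pi) a -= 2.0 * pi;
    while (a <= -pi) a += 2.0 * pi;
    return a;
}

struct Navigator {
    Phase phase = Phase::ApproachBall;
    double contactDist = 1.0;  // hypothetical: the car "holds" the ball within this range
    double headingTol = 0.2;   // hypothetical steering dead band, in radians
    double goalDist = 1.0;     // hypothetical: ball counts as delivered within this range

    // car/ball/corner are top-view positions; heading is the car's direction in
    // radians, measured counter-clockwise from the +x axis.
    Command step(Vec2 car, double heading, Vec2 ball, Vec2 corner) {
        const double toBall = std::hypot(ball.x - car.x, ball.y - car.y);
        if (phase == Phase::ApproachBall && toBall < contactDist)
            phase = Phase::PushToCorner;  // reached the ball: start pushing
        else if (phase == Phase::PushToCorner && toBall > 2.0 * contactDist)
            phase = Phase::ApproachBall;  // lost the ball: go back for it

        if (phase == Phase::PushToCorner &&
            std::hypot(corner.x - ball.x, corner.y - ball.y) < goalDist)
            return Command::Stop;  // ball is in the corner

        const Vec2 target = (phase == Phase::ApproachBall) ? ball : corner;
        const double error =
            wrapAngle(std::atan2(target.y - car.y, target.x - car.x) - heading);
        if (error > headingTol) return Command::TurnLeft;
        if (error < -headingTol) return Command::TurnRight;
        return Command::Forward;
    }
};
```

The dead band `headingTol` keeps the car from oscillating left and right around the target direction. Using a wider release distance (`2.0 * contactDist`) than capture distance avoids flickering between the two phases when the ball wobbles in front of the car.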

If you would like more information about the robot's implementation, please contact me by e-mail at elikez@gmx.net.

The team: Elik Eizenberg, Michael Seldin, Sasha Kravtsov
