
Submitted by , posted on 05 June 2001


Image Description, by

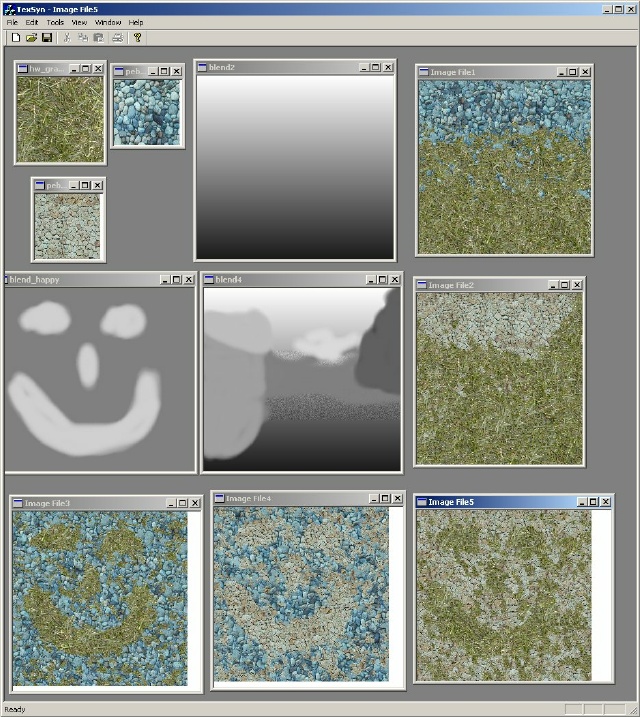

This is a screenshot of a small C++ app I wrote for a final project at Johns Hopkins University. It's a demonstration of texture synthesis given source images (the 3 small images in the upper left) and a "blend" image (the grayscale images in the center column). The algorithm I used for synthesis is based on the paper "Synthesizing Natural Textures" by Michael Ashikhmin (http://www.cs.utah.edu/~michael/ts/). Whereas the original algorithm allowed only one source image, my modifications allow 2 independent source images to be used in texture synthesis.

Here's the general idea of the algorithm. The output texture is created in scan-line order: left to right, top to bottom. At each destination pixel in the output texture, the algorithm looks at the surrounding neighborhood of pixels and finds the best-matching neighborhood (smallest L2 norm) in each source. So it computes 2 norms for each pixel, one from source 1 and one from source 2. Normally the source pixel with the lower norm would be placed at the destination pixel. However, we also have the blend image.
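A minimal sketch of the per-pixel search described above, under assumptions the post does not state: grayscale pixels instead of RGB, a causal (L-shaped) neighborhood of a hypothetical size `radius`, wrap-around at the borders, and a brute-force search over every source pixel rather than the candidate-based search in Ashikhmin's paper. `Image`, `neighborhoodDistance`, `bestMatch` and `synthesize` are illustrative names, not the app's code, and this version picks the lower norm without any blend image.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <vector>

// Hypothetical single-channel image; the real app works on RGB PPM data.
struct Image {
    int width, height;
    std::vector<std::uint8_t> pixels;  // row-major, width * height values

    Image(int w, int h) : width(w), height(h), pixels(static_cast<std::size_t>(w) * h, 0) {}

    // Wrap-around (toroidal) access, so neighborhoods never fall off an edge.
    std::uint8_t at(int x, int y) const {
        x = ((x % width) + width) % width;
        y = ((y % height) + height) % height;
        return pixels[static_cast<std::size_t>(y) * width + x];
    }
};

// Squared L2 distance between the causal (L-shaped) neighborhood of output pixel
// (dx, dy) and the same-shaped neighborhood around source pixel (sx, sy).
// "Causal" means the rows above plus the pixels to the left in the current row.
// At the output's top and left edges the wrap-around can read pixels that have
// not been synthesized yet and are still 0; a fuller version would seed them.
long long neighborhoodDistance(const Image& out, int dx, int dy,
                               const Image& src, int sx, int sy, int radius) {
    long long sum = 0;
    for (int oy = -radius; oy <= 0; ++oy) {
        for (int ox = -radius; ox <= radius; ++ox) {
            if (oy == 0 && ox >= 0) break;  // stop at the pixel being synthesized
            const int diff = int(out.at(dx + ox, dy + oy)) - int(src.at(sx + ox, sy + oy));
            sum += static_cast<long long>(diff) * diff;
        }
    }
    return sum;
}

struct Match {
    int x = 0, y = 0;
    long long distance = std::numeric_limits<long long>::max();
};

// Brute-force search over every source pixel (Ashikhmin's paper narrows the
// search to a few candidates; exhaustive search is used here only for clarity).
Match bestMatch(const Image& out, int dx, int dy, const Image& src, int radius) {
    Match best;
    for (int sy = 0; sy < src.height; ++sy) {
        for (int sx = 0; sx < src.width; ++sx) {
            const long long d = neighborhoodDistance(out, dx, dy, src, sx, sy, radius);
            if (d < best.distance) {
                best.x = sx;
                best.y = sy;
                best.distance = d;
            }
        }
    }
    return best;
}

// Fill `out` in scan-line order. At each pixel, find the best match in each
// source and copy the pixel whose neighborhood has the lower norm.
void synthesize(Image& out, const Image& src1, const Image& src2, int radius) {
    assert(radius >= 1);
    for (int y = 0; y < out.height; ++y) {
        for (int x = 0; x < out.width; ++x) {
            const Match m1 = bestMatch(out, x, y, src1, radius);
            const Match m2 = bestMatch(out, x, y, src2, radius);
            const bool useFirst = m1.distance <= m2.distance;
            const Image& src = useFirst ? src1 : src2;
            const Match& m = useFirst ? m1 : m2;
            out.pixels[static_cast<std::size_t>(y) * out.width + x] = src.at(m.x, m.y);
        }
    }
}
```

Brute force costs roughly (output pixels) × (source pixels) × (neighborhood size) comparisons, which is why practical implementations restrict the search.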

The blend image acts as a weighting between the two norms. A value of 0 (black) represents 100% source 1 and 0% source 2, and 255 (white) represents the inverse. As the blend value approaches 128, the weighting approaches 50/50. The blend image is how we can influence what the output texture looks like, as seen in the happy face.
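The post doesn't give the exact weighting formula, so here is one hypothetical rule that satisfies the endpoints it describes: black always selects source 1, white always selects source 2, and mid-gray compares the two norms almost evenly. `chooseSource` is an illustrative name, and the formula is an assumption rather than the app's code.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical blend rule (assumption, not the original app's code).
// blend comes from the grayscale blend image: 0 = black, 255 = white.
// Returns 1 or 2, the source whose best-match pixel should be copied.
int chooseSource(double norm1, double norm2, std::uint8_t blend) {
    assert(norm1 >= 0.0 && norm2 >= 0.0);
    const double w = blend / 255.0;           // 0.0 at black, 1.0 at white
    const double score1 = w * norm1;          // w == 0: source 1 scores 0 and wins
    const double score2 = (1.0 - w) * norm2;  // w == 1: source 2 scores 0 and wins
    // Near w == 0.5 both norms are scaled about equally, so the lower norm wins.
    if (score1 < score2) return 1;
    if (score2 < score1) return 2;
    return w > 0.5 ? 2 : 1;  // tie: favor the source the blend leans toward
}
```

In the synthesis loop, the blend value would be read from the blend image at the destination pixel's coordinates, assuming the blend image has the same size as the output texture.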

The interface to this sucker is really rough. It's a barebones MFC app with some functionality to read/write PPM files and, of course, to generate the texture.
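The app's file I/O isn't shown, so as a hedged sketch, this reads and writes binary PPM ("P6") images with a maxval of 255. `RgbImage`, `writePpm`, `readPpm` and `readHeaderInt` are hypothetical names; ASCII ("P3") files and 16-bit maxvals are deliberately not handled.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <istream>
#include <ostream>
#include <sstream>  // std::stringstream, convenient for in-memory round-trip tests
#include <string>
#include <vector>

// 8-bit-per-channel RGB image as stored in a binary PPM ("P6") file.
struct RgbImage {
    int width = 0, height = 0;
    std::vector<std::uint8_t> rgb;  // width * height * 3 bytes, row-major, R,G,B
};

// Header is "P6", width, height and maxval (255 here), then one whitespace
// byte and the raw pixel bytes.
bool writePpm(std::ostream& os, const RgbImage& img) {
    assert(img.rgb.size() == static_cast<std::size_t>(img.width) * img.height * 3);
    os << "P6\n" << img.width << ' ' << img.height << "\n255\n";
    os.write(reinterpret_cast<const char*>(img.rgb.data()),
             static_cast<std::streamsize>(img.rgb.size()));
    return static_cast<bool>(os);
}

// Reads one header integer, skipping whitespace and '#' comment lines.
static bool readHeaderInt(std::istream& is, int& value) {
    is >> std::ws;
    while (is.peek() == '#') {
        std::string comment;
        std::getline(is, comment);
        is >> std::ws;
    }
    return static_cast<bool>(is >> value);
}

// Accepts only P6 files with maxval 255 (one byte per channel).
bool readPpm(std::istream& is, RgbImage& img) {
    std::string magic;
    int maxval = 0;
    if (!(is >> magic) || magic != "P6") return false;
    if (!readHeaderInt(is, img.width) || !readHeaderInt(is, img.height) ||
        !readHeaderInt(is, maxval))
        return false;
    if (img.width <= 0 || img.height <= 0 || maxval != 255) return false;
    is.get();  // the single whitespace byte that ends the header
    img.rgb.resize(static_cast<std::size_t>(img.width) * img.height * 3);
    is.read(reinterpret_cast<char*>(img.rgb.data()),
            static_cast<std::streamsize>(img.rgb.size()));
    return static_cast<bool>(is);
}
```

For real files, open the streams with `std::ios::binary` so that pixel bytes such as 10 or 13 are not translated as line endings.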

The results are decent but could be better. The main difficulty seems to be getting the source images to look right together in the output texture. It is also very important to have a believable blend; for some reason I don't imagine happy faces occurring in nature =)
